Project LifeLike is a collaborative research project funded by the National Science Foundation from 2007. It aims to create a more natural computer interface in the form of a virtual human. A user can talk to an avatar to manipulate accompanying external application or retrieve specific domain knowledge.

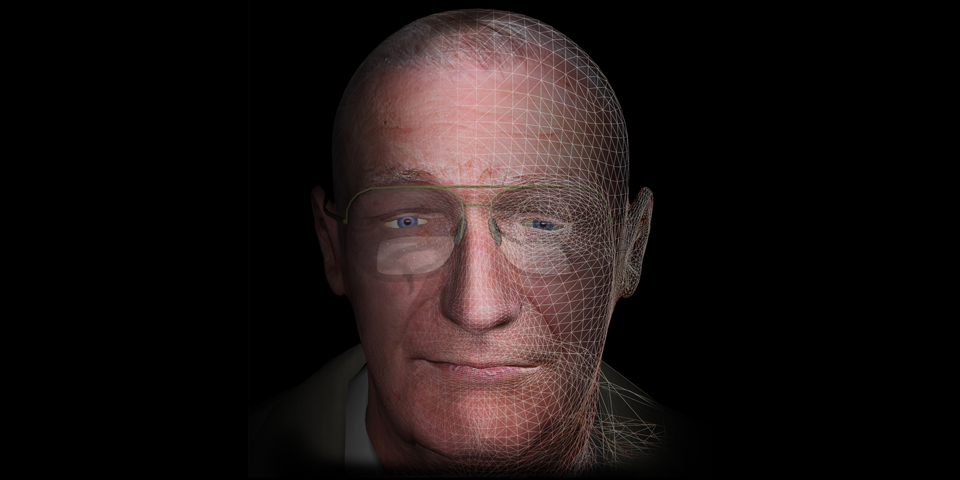

Role: I have been in charge of all visualization part including visualization framework, character modeling, animation, and expressions for real-time interaction. The main framework is implemented in C++ with Object-Oriented Rendering Engine (OGRE) and external behavior control is implemented via Python script (boost c++ Python binding). I use blendshape morphing to control facial expression in real-time. Various custom GPU shaders are also implemented to enhance visual quality. Character modeling, texturing, and rigging is done in Maya and Animation is acquired via Vicon Motion Capture system and Motionbuilder SW.

Project Video Documentation (4:27)

Over the course of this research project, I have tested and implemented various possibilities with respect to human-computer interaction and user experience. The following series of videos show some of those results.

[FFT Lip-Sync]

While I am developing an interactive virtual human as a computer interface, we need to use verbal communication primarily as we do in everyday communication with other. For this purpose, there are two possible methods available. One is to use a computer synthesized voice (Text-To-Speech) and pre-recorded voice. In the case of TTS, we can utilize phoneme & viseme information from TTS engine to drive character lip animation. For the recorded voice, there is no such info coupled with wave file. An alternative we can think of is to analyze waveform on the fly to extract certain characteristics of human voice and matching lip motion. There are several distinct frequency bands tied to some of the dominant lip movement. Therefore, I implemented FFT analyzer using bass audio library and use frequency band and its power (level) to manipulate lip motion. Below video illustrate its results.

[Reflective Material]

Our first target subject to digitize in a virtual world was very old senior wearing glasses. Glass itself does have a very interesting element in graphics. It’s reflective. At the very beginning of this project, I used simple semi-transparent material for glasses however, it is not as fun as real-life physical features. So, I started to elaborate some interesting ideas for this rendering scheme. The first few thoughts were to incorporate its physical shape. It’s not flat. It has slight curvature on the surface so we better to use some calculation to represent this, simple refraction. Then, added a few more multi-texturing techniques to mimic dirt and specs on glasses. Finally, to blend real world and the virtual world I came up with an idea to reflect a real world into virtual space. I implemented a webcam video streaming for dynamic texture update for glass material. This is one of the favorite features we recognized from many users. “Oh, I can see me there, on glasses!!!” Yes, it helps to better engage a user in interaction with a virtual character.

[Face Tracking]

When we think about our daily communication with others, face-to-face communication, it is important to realize much profound nature of human behavior. One of such characteristics is eye contact or eye gaze. Since we use a large display such as 55″ screen to represent life-size virtual human, a user experience pretty much same level of interaction as with a real person in front of him/her. While a user moves around or shows hand gestures, it is very likely for our character to show some attention to that. To implement such capability, the first thing is to track user’s behavior and movement. As we already have a live feed of webcam video, it is very feasible to add some extra features from it. I implemented the facial feature and movement detection with OpenCV library and previously developed webcam video module. I used multi-threading for video analysis so that we do not sacrifice frame rate for real-time renderings with a multi-core machine. Developed module support multi-face detection and grid-based movement detection. The face location then feeds into character behavior routine to drive eye gaze in normal mode. Detected movement grid is aka area of interest to supplement temporary eye gaze variation, which we assume a user points to some direction or wants to show some kind of gesture. Our character naturally corresponds to such aux information. Below video shows facial and movement detection results using webcam video on the fly.

[HD Video Player Integration]

We consider our LifeLike research framework as rich environments to interact with and show various information to a user. In the early stage, I made a web interface to our main rendering framework to mainly show various textual information and some online videos. However, a control over such web-rendering module is not enough. For instance, when we show YouTube video, I do not have much control to detect the start/end of video playback or interrupt it in the middle, and etc. Another possibility I designed and implemented is to use media files directly instead of the web module. I made a dynamic video texturing interface to our main framework to enable a richer set of controls. Underlying techniques are similar to webcam video feed module expect we use video library instead of streaming. I used Theora video library to extract video frame data and feed it to dynamic material manager in our rendering module. All video playback module is also multi-threaded not to interrupt fast rendering loop. Below video shows three HD video playback in our current framework.

[Astronaut App on Large Display Wall]

One of the nicest thing being in such nice research institution is lots of leading-edge resources available. One facility we have in the lab for a while is a large-scale display wall, so-called cyber-commons. We use this for various activities such as new classroom environments, remove collaborations, and many other types of research projects. The earlier version of this system has been deployed in many venues and Chicago Adler Planetarium is one of those. Adler is one of our lab’s good collaborators and I also had opportunities to work with them. They have a similar display system from our tech outreach and are running high-resolution nebular image viewer for visitors, which is also developed by one of our former students. The idea here is to have a virtual tour guide for the galaxy. Different from conventional museum installations, this system is interactive. A user can control image viewer and see very high-resolution details, however, we generally do not have a good sense of what this image is since we are not an expert in this domain. Right there, our virtual character can help to explain images and guide through the much deeper universe. This is one good example of my research project outreach. Below video illustrates this prototype application. A user can talk to an astronaut tour guide and ask questions regarding images on the display. My framework is capable of understanding user speech (speech recognition) and responds to it via synthesized voice from written rich knowledge. The implemented system runs along with external image viewer and contextualizes information based on the current view of the image on the screen.

[Hair Simulation on GPU]

Here is another interesting graphical feature I developed. It is a simple hair simulation on GPU. When I made a female character for another deployment, one thing bothered me a bit was her hair. Female generally has longer hair than man and looking at the rigid motion of such soft part of model does not give realistic feeling at the very first glance. So, I started to think about easy and quick hack to walk around this instead of running full hair simulation, which is not suitable for a real-time interactive application. It is a very simple bounce back model without calculating real-world spring model. While the external force is detected (i.e wind or head movement), vertices on hair mesh model change its position slightly in the same direction as the force. Imagine you hold a long string and move your hand round, what happens is the root of the string you are holding follows your finger position immediately while the other end slowly follows due to the fraction of the air and time to transfer the force from root to the end. Sort of… Just simple idea. Such varied motion characteristics are implemented by utilizing UV texture coordinate for the hair patch model. Assume vertices around the root of hair strings have higher V value and move quickly. The end of the string is opposite case. Below video shows this simple simulation result. All simulation is implemented on GPU so no worry for the performance.